TFDS fornisce una raccolta di set di dati pronti per l'uso da utilizzare con TensorFlow, Jax e altri framework di Machine Learning.

Gestisce il download e la preparazione dei dati in modo deterministico e la costruzione di un tf.data.Dataset (o np.array ).

Visualizza su TensorFlow.org Visualizza su TensorFlow.org |  Esegui in Google Colab Esegui in Google Colab |  Visualizza l'origine su GitHub Visualizza l'origine su GitHub |  Scarica quaderno Scarica quaderno |

Installazione

TFDS esiste in due pacchetti:

-

pip install tensorflow-datasets: la versione stabile, rilasciata ogni pochi mesi. -

pip install tfds-nightly: rilasciato ogni giorno, contiene le ultime versioni dei set di dati.

Questa colab usa tfds-nightly :

pip install -q tfds-nightly tensorflow matplotlib

import matplotlib.pyplot as plt

import numpy as np

import tensorflow as tf

import tensorflow_datasets as tfds

Trova i set di dati disponibili

Tutti i costruttori di set di dati sono sottoclassi di tfds.core.DatasetBuilder . Per ottenere l'elenco dei builder disponibili, usa tfds.list_builders() o guarda il nostro catalogo .

tfds.list_builders()

['abstract_reasoning', 'accentdb', 'aeslc', 'aflw2k3d', 'ag_news_subset', 'ai2_arc', 'ai2_arc_with_ir', 'amazon_us_reviews', 'anli', 'arc', 'asset', 'assin2', 'bair_robot_pushing_small', 'bccd', 'beans', 'bee_dataset', 'big_patent', 'bigearthnet', 'billsum', 'binarized_mnist', 'binary_alpha_digits', 'blimp', 'booksum', 'bool_q', 'c4', 'caltech101', 'caltech_birds2010', 'caltech_birds2011', 'cardiotox', 'cars196', 'cassava', 'cats_vs_dogs', 'celeb_a', 'celeb_a_hq', 'cfq', 'cherry_blossoms', 'chexpert', 'cifar10', 'cifar100', 'cifar10_1', 'cifar10_corrupted', 'citrus_leaves', 'cityscapes', 'civil_comments', 'clevr', 'clic', 'clinc_oos', 'cmaterdb', 'cnn_dailymail', 'coco', 'coco_captions', 'coil100', 'colorectal_histology', 'colorectal_histology_large', 'common_voice', 'coqa', 'cos_e', 'cosmos_qa', 'covid19', 'covid19sum', 'crema_d', 'cs_restaurants', 'curated_breast_imaging_ddsm', 'cycle_gan', 'd4rl_adroit_door', 'd4rl_adroit_hammer', 'd4rl_adroit_pen', 'd4rl_adroit_relocate', 'd4rl_antmaze', 'd4rl_mujoco_ant', 'd4rl_mujoco_halfcheetah', 'd4rl_mujoco_hopper', 'd4rl_mujoco_walker2d', 'dart', 'davis', 'deep_weeds', 'definite_pronoun_resolution', 'dementiabank', 'diabetic_retinopathy_detection', 'diamonds', 'div2k', 'dmlab', 'doc_nli', 'dolphin_number_word', 'domainnet', 'downsampled_imagenet', 'drop', 'dsprites', 'dtd', 'duke_ultrasound', 'e2e_cleaned', 'efron_morris75', 'emnist', 'eraser_multi_rc', 'esnli', 'eurosat', 'fashion_mnist', 'flic', 'flores', 'food101', 'forest_fires', 'fuss', 'gap', 'geirhos_conflict_stimuli', 'gem', 'genomics_ood', 'german_credit_numeric', 'gigaword', 'glue', 'goemotions', 'gov_report', 'gpt3', 'gref', 'groove', 'grounded_scan', 'gsm8k', 'gtzan', 'gtzan_music_speech', 'hellaswag', 'higgs', 'horses_or_humans', 'howell', 'i_naturalist2017', 'i_naturalist2018', 'imagenet2012', 'imagenet2012_corrupted', 'imagenet2012_multilabel', 'imagenet2012_real', 'imagenet2012_subset', 'imagenet_a', 'imagenet_lt', 'imagenet_r', 'imagenet_resized', 'imagenet_sketch', 'imagenet_v2', 'imagenette', 'imagewang', 'imdb_reviews', 'irc_disentanglement', 'iris', 'istella', 'kddcup99', 'kitti', 'kmnist', 'lambada', 'lfw', 'librispeech', 'librispeech_lm', 'libritts', 'ljspeech', 'lm1b', 'locomotion', 'lost_and_found', 'lsun', 'lvis', 'malaria', 'math_dataset', 'math_qa', 'mctaco', 'mlqa', 'mnist', 'mnist_corrupted', 'movie_lens', 'movie_rationales', 'movielens', 'moving_mnist', 'mslr_web', 'multi_news', 'multi_nli', 'multi_nli_mismatch', 'natural_questions', 'natural_questions_open', 'newsroom', 'nsynth', 'nyu_depth_v2', 'ogbg_molpcba', 'omniglot', 'open_images_challenge2019_detection', 'open_images_v4', 'openbookqa', 'opinion_abstracts', 'opinosis', 'opus', 'oxford_flowers102', 'oxford_iiit_pet', 'para_crawl', 'pass', 'patch_camelyon', 'paws_wiki', 'paws_x_wiki', 'penguins', 'pet_finder', 'pg19', 'piqa', 'places365_small', 'plant_leaves', 'plant_village', 'plantae_k', 'protein_net', 'qa4mre', 'qasc', 'quac', 'quality', 'quickdraw_bitmap', 'race', 'radon', 'reddit', 'reddit_disentanglement', 'reddit_tifu', 'ref_coco', 'resisc45', 'rlu_atari', 'rlu_atari_checkpoints', 'rlu_atari_checkpoints_ordered', 'rlu_dmlab_explore_object_rewards_few', 'rlu_dmlab_explore_object_rewards_many', 'rlu_dmlab_rooms_select_nonmatching_object', 'rlu_dmlab_rooms_watermaze', 'rlu_dmlab_seekavoid_arena01', 'rlu_rwrl', 'robomimic_ph', 'robonet', 'robosuite_panda_pick_place_can', 'rock_paper_scissors', 'rock_you', 's3o4d', 'salient_span_wikipedia', 'samsum', 'savee', 'scan', 'scene_parse150', 'schema_guided_dialogue', 'scicite', 'scientific_papers', 'scrolls', 'sentiment140', 'shapes3d', 'siscore', 'smallnorb', 'smartwatch_gestures', 'snli', 'so2sat', 'speech_commands', 'spoken_digit', 'squad', 'squad_question_generation', 'stanford_dogs', 'stanford_online_products', 'star_cfq', 'starcraft_video', 'stl10', 'story_cloze', 'summscreen', 'sun397', 'super_glue', 'svhn_cropped', 'symmetric_solids', 'tao', 'ted_hrlr_translate', 'ted_multi_translate', 'tedlium', 'tf_flowers', 'the300w_lp', 'tiny_shakespeare', 'titanic', 'trec', 'trivia_qa', 'tydi_qa', 'uc_merced', 'ucf101', 'vctk', 'visual_domain_decathlon', 'voc', 'voxceleb', 'voxforge', 'waymo_open_dataset', 'web_nlg', 'web_questions', 'wider_face', 'wiki40b', 'wiki_auto', 'wiki_bio', 'wiki_table_questions', 'wiki_table_text', 'wikiann', 'wikihow', 'wikipedia', 'wikipedia_toxicity_subtypes', 'wine_quality', 'winogrande', 'wit', 'wit_kaggle', 'wmt13_translate', 'wmt14_translate', 'wmt15_translate', 'wmt16_translate', 'wmt17_translate', 'wmt18_translate', 'wmt19_translate', 'wmt_t2t_translate', 'wmt_translate', 'wordnet', 'wsc273', 'xnli', 'xquad', 'xsum', 'xtreme_pawsx', 'xtreme_xnli', 'yelp_polarity_reviews', 'yes_no', 'youtube_vis', 'huggingface:acronym_identification', 'huggingface:ade_corpus_v2', 'huggingface:adversarial_qa', 'huggingface:aeslc', 'huggingface:afrikaans_ner_corpus', 'huggingface:ag_news', 'huggingface:ai2_arc', 'huggingface:air_dialogue', 'huggingface:ajgt_twitter_ar', 'huggingface:allegro_reviews', 'huggingface:allocine', 'huggingface:alt', 'huggingface:amazon_polarity', 'huggingface:amazon_reviews_multi', 'huggingface:amazon_us_reviews', 'huggingface:ambig_qa', 'huggingface:americas_nli', 'huggingface:ami', 'huggingface:amttl', 'huggingface:anli', 'huggingface:app_reviews', 'huggingface:aqua_rat', 'huggingface:aquamuse', 'huggingface:ar_cov19', 'huggingface:ar_res_reviews', 'huggingface:ar_sarcasm', 'huggingface:arabic_billion_words', 'huggingface:arabic_pos_dialect', 'huggingface:arabic_speech_corpus', 'huggingface:arcd', 'huggingface:arsentd_lev', 'huggingface:art', 'huggingface:arxiv_dataset', 'huggingface:ascent_kb', 'huggingface:aslg_pc12', 'huggingface:asnq', 'huggingface:asset', 'huggingface:assin', 'huggingface:assin2', 'huggingface:atomic', 'huggingface:autshumato', 'huggingface:babi_qa', 'huggingface:banking77', 'huggingface:bbaw_egyptian', 'huggingface:bbc_hindi_nli', 'huggingface:bc2gm_corpus', 'huggingface:beans', 'huggingface:best2009', 'huggingface:bianet', 'huggingface:bible_para', 'huggingface:big_patent', 'huggingface:billsum', 'huggingface:bing_coronavirus_query_set', 'huggingface:biomrc', 'huggingface:biosses', 'huggingface:blbooksgenre', 'huggingface:blended_skill_talk', 'huggingface:blimp', 'huggingface:blog_authorship_corpus', 'huggingface:bn_hate_speech', 'huggingface:bookcorpus', 'huggingface:bookcorpusopen', 'huggingface:boolq', 'huggingface:bprec', 'huggingface:break_data', 'huggingface:brwac', 'huggingface:bsd_ja_en', 'huggingface:bswac', 'huggingface:c3', 'huggingface:c4', 'huggingface:cail2018', 'huggingface:caner', 'huggingface:capes', 'huggingface:casino', 'huggingface:catalonia_independence', 'huggingface:cats_vs_dogs', 'huggingface:cawac', 'huggingface:cbt', 'huggingface:cc100', 'huggingface:cc_news', 'huggingface:ccaligned_multilingual', 'huggingface:cdsc', 'huggingface:cdt', 'huggingface:cedr', 'huggingface:cfq', 'huggingface:chr_en', 'huggingface:cifar10', 'huggingface:cifar100', 'huggingface:circa', 'huggingface:civil_comments', 'huggingface:clickbait_news_bg', 'huggingface:climate_fever', 'huggingface:clinc_oos', 'huggingface:clue', 'huggingface:cmrc2018', 'huggingface:cmu_hinglish_dog', 'huggingface:cnn_dailymail', 'huggingface:coached_conv_pref', 'huggingface:coarse_discourse', 'huggingface:codah', 'huggingface:code_search_net', 'huggingface:code_x_glue_cc_clone_detection_big_clone_bench', 'huggingface:code_x_glue_cc_clone_detection_poj104', 'huggingface:code_x_glue_cc_cloze_testing_all', 'huggingface:code_x_glue_cc_cloze_testing_maxmin', 'huggingface:code_x_glue_cc_code_completion_line', 'huggingface:code_x_glue_cc_code_completion_token', 'huggingface:code_x_glue_cc_code_refinement', 'huggingface:code_x_glue_cc_code_to_code_trans', 'huggingface:code_x_glue_cc_defect_detection', 'huggingface:code_x_glue_ct_code_to_text', 'huggingface:code_x_glue_tc_nl_code_search_adv', 'huggingface:code_x_glue_tc_text_to_code', 'huggingface:code_x_glue_tt_text_to_text', 'huggingface:com_qa', 'huggingface:common_gen', 'huggingface:common_language', 'huggingface:common_voice', 'huggingface:commonsense_qa', 'huggingface:competition_math', 'huggingface:compguesswhat', 'huggingface:conceptnet5', 'huggingface:conll2000', 'huggingface:conll2002', 'huggingface:conll2003', 'huggingface:conllpp', 'huggingface:conv_ai', 'huggingface:conv_ai_2', 'huggingface:conv_ai_3', 'huggingface:conv_questions', 'huggingface:coqa', 'huggingface:cord19', 'huggingface:cornell_movie_dialog', 'huggingface:cos_e', 'huggingface:cosmos_qa', 'huggingface:counter', 'huggingface:covid_qa_castorini', 'huggingface:covid_qa_deepset', 'huggingface:covid_qa_ucsd', 'huggingface:covid_tweets_japanese', 'huggingface:covost2', 'huggingface:craigslist_bargains', 'huggingface:crawl_domain', 'huggingface:crd3', 'huggingface:crime_and_punish', 'huggingface:crows_pairs', 'huggingface:cryptonite', 'huggingface:cs_restaurants', 'huggingface:cuad', 'huggingface:curiosity_dialogs', 'huggingface:daily_dialog', 'huggingface:dane', 'huggingface:danish_political_comments', 'huggingface:dart', 'huggingface:datacommons_factcheck', 'huggingface:dbpedia_14', 'huggingface:dbrd', 'huggingface:deal_or_no_dialog', 'huggingface:definite_pronoun_resolution', 'huggingface:dengue_filipino', 'huggingface:dialog_re', 'huggingface:diplomacy_detection', 'huggingface:disaster_response_messages', 'huggingface:discofuse', 'huggingface:discovery', 'huggingface:disfl_qa', 'huggingface:doc2dial', 'huggingface:docred', 'huggingface:doqa', 'huggingface:dream', 'huggingface:drop', 'huggingface:duorc', 'huggingface:dutch_social', 'huggingface:dyk', 'huggingface:e2e_nlg', 'huggingface:e2e_nlg_cleaned', 'huggingface:ecb', 'huggingface:ecthr_cases', 'huggingface:eduge', 'huggingface:ehealth_kd', 'huggingface:eitb_parcc', 'huggingface:eli5', 'huggingface:eli5_category', 'huggingface:emea', 'huggingface:emo', 'huggingface:emotion', 'huggingface:emotone_ar', 'huggingface:empathetic_dialogues', 'huggingface:enriched_web_nlg', 'huggingface:eraser_multi_rc', 'huggingface:esnli', 'huggingface:eth_py150_open', 'huggingface:ethos', 'huggingface:eu_regulatory_ir', 'huggingface:eurlex', 'huggingface:euronews', 'huggingface:europa_eac_tm', 'huggingface:europa_ecdc_tm', 'huggingface:europarl_bilingual', 'huggingface:event2Mind', 'huggingface:evidence_infer_treatment', 'huggingface:exams', 'huggingface:factckbr', 'huggingface:fake_news_english', 'huggingface:fake_news_filipino', 'huggingface:farsi_news', 'huggingface:fashion_mnist', 'huggingface:fever', 'huggingface:few_rel', 'huggingface:financial_phrasebank', 'huggingface:finer', 'huggingface:flores', 'huggingface:flue', 'huggingface:food101', 'huggingface:fquad', 'huggingface:freebase_qa', 'huggingface:gap', 'huggingface:gem', 'huggingface:generated_reviews_enth', 'huggingface:generics_kb', 'huggingface:german_legal_entity_recognition', 'huggingface:germaner', 'huggingface:germeval_14', 'huggingface:giga_fren', 'huggingface:gigaword', 'huggingface:glucose', 'huggingface:glue', 'huggingface:gnad10', 'huggingface:go_emotions', 'huggingface:gooaq', 'huggingface:google_wellformed_query', 'huggingface:grail_qa', 'huggingface:great_code', 'huggingface:greek_legal_code', 'huggingface:guardian_authorship', 'huggingface:gutenberg_time', 'huggingface:hans', 'huggingface:hansards', 'huggingface:hard', 'huggingface:harem', 'huggingface:has_part', 'huggingface:hate_offensive', 'huggingface:hate_speech18', 'huggingface:hate_speech_filipino', 'huggingface:hate_speech_offensive', 'huggingface:hate_speech_pl', 'huggingface:hate_speech_portuguese', 'huggingface:hatexplain', 'huggingface:hausa_voa_ner', 'huggingface:hausa_voa_topics', 'huggingface:hda_nli_hindi', 'huggingface:head_qa', 'huggingface:health_fact', 'huggingface:hebrew_projectbenyehuda', 'huggingface:hebrew_sentiment', 'huggingface:hebrew_this_world', 'huggingface:hellaswag', 'huggingface:hendrycks_test', 'huggingface:hind_encorp', 'huggingface:hindi_discourse', 'huggingface:hippocorpus', 'huggingface:hkcancor', 'huggingface:hlgd', 'huggingface:hope_edi', 'huggingface:hotpot_qa', 'huggingface:hover', 'huggingface:hrenwac_para', 'huggingface:hrwac', 'huggingface:humicroedit', 'huggingface:hybrid_qa', 'huggingface:hyperpartisan_news_detection', 'huggingface:iapp_wiki_qa_squad', 'huggingface:id_clickbait', 'huggingface:id_liputan6', 'huggingface:id_nergrit_corpus', 'huggingface:id_newspapers_2018', 'huggingface:id_panl_bppt', 'huggingface:id_puisi', 'huggingface:igbo_english_machine_translation', 'huggingface:igbo_monolingual', 'huggingface:igbo_ner', 'huggingface:ilist', 'huggingface:imdb', 'huggingface:imdb_urdu_reviews', 'huggingface:imppres', 'huggingface:indic_glue', 'huggingface:indonli', 'huggingface:indonlu', 'huggingface:inquisitive_qg', 'huggingface:interpress_news_category_tr', 'huggingface:interpress_news_category_tr_lite', 'huggingface:irc_disentangle', 'huggingface:isixhosa_ner_corpus', 'huggingface:isizulu_ner_corpus', 'huggingface:iwslt2017', 'huggingface:jeopardy', 'huggingface:jfleg', 'huggingface:jigsaw_toxicity_pred', 'huggingface:jigsaw_unintended_bias', 'huggingface:jnlpba', 'huggingface:journalists_questions', 'huggingface:kan_hope', 'huggingface:kannada_news', 'huggingface:kd_conv', 'huggingface:kde4', 'huggingface:kelm', 'huggingface:kilt_tasks', 'huggingface:kilt_wikipedia', 'huggingface:kinnews_kirnews', 'huggingface:klue', 'huggingface:kor_3i4k', 'huggingface:kor_hate', 'huggingface:kor_ner', 'huggingface:kor_nli', 'huggingface:kor_nlu', 'huggingface:kor_qpair', 'huggingface:kor_sae', 'huggingface:kor_sarcasm', 'huggingface:labr', 'huggingface:lama', 'huggingface:lambada', 'huggingface:large_spanish_corpus', 'huggingface:laroseda', 'huggingface:lc_quad', 'huggingface:lener_br', 'huggingface:lex_glue', 'huggingface:liar', 'huggingface:librispeech_asr', 'huggingface:librispeech_lm', 'huggingface:limit', 'huggingface:lince', 'huggingface:linnaeus', 'huggingface:liveqa', 'huggingface:lj_speech', 'huggingface:lm1b', 'huggingface:lst20', 'huggingface:m_lama', 'huggingface:mac_morpho', 'huggingface:makhzan', 'huggingface:masakhaner', 'huggingface:math_dataset', 'huggingface:math_qa', 'huggingface:matinf', 'huggingface:mbpp', 'huggingface:mc4', 'huggingface:mc_taco', 'huggingface:md_gender_bias', 'huggingface:mdd', 'huggingface:med_hop', 'huggingface:medal', 'huggingface:medical_dialog', 'huggingface:medical_questions_pairs', 'huggingface:menyo20k_mt', 'huggingface:meta_woz', 'huggingface:metooma', 'huggingface:metrec', 'huggingface:miam', 'huggingface:mkb', 'huggingface:mkqa', 'huggingface:mlqa', 'huggingface:mlsum', 'huggingface:mnist', 'huggingface:mocha', 'huggingface:moroco', 'huggingface:movie_rationales', 'huggingface:mrqa', 'huggingface:ms_marco', 'huggingface:ms_terms', 'huggingface:msr_genomics_kbcomp', 'huggingface:msr_sqa', 'huggingface:msr_text_compression', 'huggingface:msr_zhen_translation_parity', 'huggingface:msra_ner', 'huggingface:mt_eng_vietnamese', 'huggingface:muchocine', 'huggingface:multi_booked', 'huggingface:multi_eurlex', 'huggingface:multi_news', 'huggingface:multi_nli', 'huggingface:multi_nli_mismatch', 'huggingface:multi_para_crawl', 'huggingface:multi_re_qa', 'huggingface:multi_woz_v22', 'huggingface:multi_x_science_sum', 'huggingface:multidoc2dial', 'huggingface:multilingual_librispeech', 'huggingface:mutual_friends', 'huggingface:mwsc', 'huggingface:myanmar_news', 'huggingface:narrativeqa', 'huggingface:narrativeqa_manual', 'huggingface:natural_questions', 'huggingface:ncbi_disease', 'huggingface:nchlt', 'huggingface:ncslgr', 'huggingface:nell', 'huggingface:neural_code_search', 'huggingface:news_commentary', 'huggingface:newsgroup', 'huggingface:newsph', 'huggingface:newsph_nli', 'huggingface:newspop', 'huggingface:newsqa', 'huggingface:newsroom', 'huggingface:nkjp-ner', 'huggingface:nli_tr', 'huggingface:nlu_evaluation_data', 'huggingface:norec', 'huggingface:norne', 'huggingface:norwegian_ner', 'huggingface:nq_open', 'huggingface:nsmc', 'huggingface:numer_sense', 'huggingface:numeric_fused_head', 'huggingface:oclar', 'huggingface:offcombr', 'huggingface:offenseval2020_tr', 'huggingface:offenseval_dravidian', 'huggingface:ofis_publik', 'huggingface:ohsumed', 'huggingface:ollie', 'huggingface:omp', 'huggingface:onestop_english', 'huggingface:onestop_qa', 'huggingface:open_subtitles', 'huggingface:openai_humaneval', 'huggingface:openbookqa', 'huggingface:openslr', 'huggingface:openwebtext', 'huggingface:opinosis', 'huggingface:opus100', 'huggingface:opus_books', 'huggingface:opus_dgt', 'huggingface:opus_dogc', 'huggingface:opus_elhuyar', 'huggingface:opus_euconst', 'huggingface:opus_finlex', 'huggingface:opus_fiskmo', 'huggingface:opus_gnome', 'huggingface:opus_infopankki', 'huggingface:opus_memat', 'huggingface:opus_montenegrinsubs', 'huggingface:opus_openoffice', 'huggingface:opus_paracrawl', 'huggingface:opus_rf', 'huggingface:opus_tedtalks', 'huggingface:opus_ubuntu', 'huggingface:opus_wikipedia', 'huggingface:opus_xhosanavy', 'huggingface:orange_sum', 'huggingface:oscar', 'huggingface:para_crawl', 'huggingface:para_pat', 'huggingface:parsinlu_reading_comprehension', 'huggingface:paws', 'huggingface:paws-x', 'huggingface:pec', 'huggingface:peer_read', 'huggingface:peoples_daily_ner', 'huggingface:per_sent', 'huggingface:persian_ner', 'huggingface:pg19', 'huggingface:php', 'huggingface:piaf', 'huggingface:pib', 'huggingface:piqa', 'huggingface:pn_summary', 'huggingface:poem_sentiment', 'huggingface:polemo2', 'huggingface:poleval2019_cyberbullying', 'huggingface:poleval2019_mt', 'huggingface:polsum', 'huggingface:polyglot_ner', 'huggingface:prachathai67k', 'huggingface:pragmeval', 'huggingface:proto_qa', 'huggingface:psc', 'huggingface:ptb_text_only', 'huggingface:pubmed', 'huggingface:pubmed_qa', 'huggingface:py_ast', 'huggingface:qa4mre', 'huggingface:qa_srl', 'huggingface:qa_zre', 'huggingface:qangaroo', 'huggingface:qanta', 'huggingface:qasc', 'huggingface:qasper', 'huggingface:qed', 'huggingface:qed_amara', 'huggingface:quac', 'huggingface:quail', 'huggingface:quarel', 'huggingface:quartz', 'huggingface:quora', 'huggingface:quoref', 'huggingface:race', 'huggingface:re_dial', 'huggingface:reasoning_bg', 'huggingface:recipe_nlg', 'huggingface:reclor', 'huggingface:reddit', 'huggingface:reddit_tifu', 'huggingface:refresd', 'huggingface:reuters21578', 'huggingface:riddle_sense', 'huggingface:ro_sent', 'huggingface:ro_sts', 'huggingface:ro_sts_parallel', 'huggingface:roman_urdu', 'huggingface:ronec', 'huggingface:ropes', 'huggingface:rotten_tomatoes', 'huggingface:russian_super_glue', 'huggingface:s2orc', 'huggingface:samsum', 'huggingface:sanskrit_classic', 'huggingface:saudinewsnet', 'huggingface:sberquad', 'huggingface:scan', 'huggingface:scb_mt_enth_2020', 'huggingface:schema_guided_dstc8', 'huggingface:scicite', 'huggingface:scielo', 'huggingface:scientific_papers', 'huggingface:scifact', 'huggingface:sciq', 'huggingface:scitail', 'huggingface:scitldr', 'huggingface:search_qa', 'huggingface:sede', 'huggingface:selqa', 'huggingface:sem_eval_2010_task_8', 'huggingface:sem_eval_2014_task_1', 'huggingface:sem_eval_2018_task_1', 'huggingface:sem_eval_2020_task_11', 'huggingface:sent_comp', 'huggingface:senti_lex', 'huggingface:senti_ws', 'huggingface:sentiment140', 'huggingface:sepedi_ner', 'huggingface:sesotho_ner_corpus', 'huggingface:setimes', 'huggingface:setswana_ner_corpus', 'huggingface:sharc', 'huggingface:sharc_modified', 'huggingface:sick', 'huggingface:silicone', 'huggingface:simple_questions_v2', 'huggingface:siswati_ner_corpus', 'huggingface:smartdata', 'huggingface:sms_spam', 'huggingface:snips_built_in_intents', 'huggingface:snli', 'huggingface:snow_simplified_japanese_corpus', 'huggingface:so_stacksample', 'huggingface:social_bias_frames', 'huggingface:social_i_qa', 'huggingface:sofc_materials_articles', 'huggingface:sogou_news', 'huggingface:spanish_billion_words', 'huggingface:spc', 'huggingface:species_800', 'huggingface:speech_commands', 'huggingface:spider', 'huggingface:squad', 'huggingface:squad_adversarial', 'huggingface:squad_es', 'huggingface:squad_it', 'huggingface:squad_kor_v1', 'huggingface:squad_kor_v2', 'huggingface:squad_v1_pt', 'huggingface:squad_v2', 'huggingface:squadshifts', 'huggingface:srwac', 'huggingface:sst', 'huggingface:stereoset', 'huggingface:story_cloze', 'huggingface:stsb_mt_sv', 'huggingface:stsb_multi_mt', 'huggingface:style_change_detection', 'huggingface:subjqa', 'huggingface:super_glue', 'huggingface:superb', 'huggingface:swag', 'huggingface:swahili', 'huggingface:swahili_news', 'huggingface:swda', 'huggingface:swedish_medical_ner', 'huggingface:swedish_ner_corpus', 'huggingface:swedish_reviews', 'huggingface:swiss_judgment_prediction', 'huggingface:tab_fact', 'huggingface:tamilmixsentiment', 'huggingface:tanzil', 'huggingface:tapaco', 'huggingface:tashkeela', 'huggingface:taskmaster1', 'huggingface:taskmaster2', 'huggingface:taskmaster3', 'huggingface:tatoeba', 'huggingface:ted_hrlr', 'huggingface:ted_iwlst2013', 'huggingface:ted_multi', 'huggingface:ted_talks_iwslt', 'huggingface:telugu_books', 'huggingface:telugu_news', 'huggingface:tep_en_fa_para', 'huggingface:thai_toxicity_tweet', 'huggingface:thainer', 'huggingface:thaiqa_squad', 'huggingface:thaisum', 'huggingface:the_pile', 'huggingface:the_pile_books3', 'huggingface:the_pile_openwebtext2', 'huggingface:the_pile_stack_exchange', 'huggingface:tilde_model', 'huggingface:time_dial', 'huggingface:times_of_india_news_headlines', 'huggingface:timit_asr', 'huggingface:tiny_shakespeare', 'huggingface:tlc', 'huggingface:tmu_gfm_dataset', 'huggingface:totto', 'huggingface:trec', 'huggingface:trivia_qa', 'huggingface:tsac', 'huggingface:ttc4900', 'huggingface:tunizi', 'huggingface:tuple_ie', 'huggingface:turk', 'huggingface:turkish_movie_sentiment', 'huggingface:turkish_ner', 'huggingface:turkish_product_reviews', 'huggingface:turkish_shrinked_ner', 'huggingface:turku_ner_corpus', 'huggingface:tweet_eval', 'huggingface:tweet_qa', 'huggingface:tweets_ar_en_parallel', 'huggingface:tweets_hate_speech_detection', 'huggingface:twi_text_c3', 'huggingface:twi_wordsim353', 'huggingface:tydiqa', 'huggingface:ubuntu_dialogs_corpus', 'huggingface:udhr', 'huggingface:um005', 'huggingface:un_ga', 'huggingface:un_multi', 'huggingface:un_pc', 'huggingface:universal_dependencies', 'huggingface:universal_morphologies', 'huggingface:urdu_fake_news', 'huggingface:urdu_sentiment_corpus', 'huggingface:vctk', 'huggingface:vivos', 'huggingface:web_nlg', 'huggingface:web_of_science', 'huggingface:web_questions', 'huggingface:weibo_ner', 'huggingface:wi_locness', 'huggingface:wiki40b', 'huggingface:wiki_asp', 'huggingface:wiki_atomic_edits', 'huggingface:wiki_auto', 'huggingface:wiki_bio', 'huggingface:wiki_dpr', 'huggingface:wiki_hop', 'huggingface:wiki_lingua', 'huggingface:wiki_movies', 'huggingface:wiki_qa', 'huggingface:wiki_qa_ar', 'huggingface:wiki_snippets', 'huggingface:wiki_source', 'huggingface:wiki_split', 'huggingface:wiki_summary', 'huggingface:wikiann', 'huggingface:wikicorpus', 'huggingface:wikihow', 'huggingface:wikipedia', 'huggingface:wikisql', 'huggingface:wikitext', 'huggingface:wikitext_tl39', 'huggingface:wili_2018', 'huggingface:wino_bias', 'huggingface:winograd_wsc', 'huggingface:winogrande', 'huggingface:wiqa', 'huggingface:wisesight1000', 'huggingface:wisesight_sentiment', ...]

Carica un set di dati

tfds.load

Il modo più semplice per caricare un set di dati è tfds.load . Lo farà:

- Scarica i dati e salvali come file

tfrecord. - Carica il

tfrecorde crea iltf.data.Dataset.

ds = tfds.load('mnist', split='train', shuffle_files=True)

assert isinstance(ds, tf.data.Dataset)

print(ds)

<_OptionsDataset element_spec={'image': TensorSpec(shape=(28, 28, 1), dtype=tf.uint8, name=None), 'label': TensorSpec(shape=(), dtype=tf.int64, name=None)}>

2022-02-07 04:07:40.542243: E tensorflow/stream_executor/cuda/cuda_driver.cc:271] failed call to cuInit: CUDA_ERROR_NO_DEVICE: no CUDA-capable device is detected

Alcuni argomenti comuni:

-

split=: quale split leggere (ad es'train',['train', 'test'],'train[80%:]',...). Consulta la nostra guida alle API divise . -

shuffle_files=: controlla se mescolare i file tra ogni epoca (TFDS memorizza grandi set di dati in più file più piccoli). -

data_dir=: Posizione in cui viene salvato il set di dati (predefinito su~/tensorflow_datasets/) -

with_info=True: Restituiscetfds.core.DatasetInfocontenente i metadati del set di dati -

download=False: Disabilita il download

tfds.builder

tfds.load è un sottile wrapper attorno a tfds.core.DatasetBuilder . Puoi ottenere lo stesso output utilizzando l'API tfds.core.DatasetBuilder :

builder = tfds.builder('mnist')

# 1. Create the tfrecord files (no-op if already exists)

builder.download_and_prepare()

# 2. Load the `tf.data.Dataset`

ds = builder.as_dataset(split='train', shuffle_files=True)

print(ds)

<_OptionsDataset element_spec={'image': TensorSpec(shape=(28, 28, 1), dtype=tf.uint8, name=None), 'label': TensorSpec(shape=(), dtype=tf.int64, name=None)}>

tfds build CLI

Se desideri generare un set di dati specifico, puoi utilizzare la riga di comando tfds . Per esempio:

tfds build mnist

Consulta il documento per i flag disponibili.

Iterare su un set di dati

Come dict

Per impostazione predefinita, l'oggetto tf.data.Dataset contiene un dict di tf.Tensor s:

ds = tfds.load('mnist', split='train')

ds = ds.take(1) # Only take a single example

for example in ds: # example is `{'image': tf.Tensor, 'label': tf.Tensor}`

print(list(example.keys()))

image = example["image"]

label = example["label"]

print(image.shape, label)

['image', 'label'] (28, 28, 1) tf.Tensor(4, shape=(), dtype=int64) 2022-02-07 04:07:41.932638: W tensorflow/core/kernels/data/cache_dataset_ops.cc:768] The calling iterator did not fully read the dataset being cached. In order to avoid unexpected truncation of the dataset, the partially cached contents of the dataset will be discarded. This can happen if you have an input pipeline similar to `dataset.cache().take(k).repeat()`. You should use `dataset.take(k).cache().repeat()` instead.

Per scoprire i nomi e la struttura delle chiavi dict , guarda la documentazione del set di dati nel nostro catalogo . Ad esempio: documentazione mnist .

Come tupla ( as_supervised=True )

Usando as_supervised=True , puoi ottenere una tupla (features, label) invece per i set di dati supervisionati.

ds = tfds.load('mnist', split='train', as_supervised=True)

ds = ds.take(1)

for image, label in ds: # example is (image, label)

print(image.shape, label)

(28, 28, 1) tf.Tensor(4, shape=(), dtype=int64) 2022-02-07 04:07:42.593594: W tensorflow/core/kernels/data/cache_dataset_ops.cc:768] The calling iterator did not fully read the dataset being cached. In order to avoid unexpected truncation of the dataset, the partially cached contents of the dataset will be discarded. This can happen if you have an input pipeline similar to `dataset.cache().take(k).repeat()`. You should use `dataset.take(k).cache().repeat()` instead.

Come numpy ( tfds.as_numpy )

Usa tfds.as_numpy per convertire:

-

tf.Tensor->np.array -

tf.data.Dataset->Iterator[Tree[np.array]](Treepuò essere nidificato arbitrariamenteDict,Tuple)

ds = tfds.load('mnist', split='train', as_supervised=True)

ds = ds.take(1)

for image, label in tfds.as_numpy(ds):

print(type(image), type(label), label)

<class 'numpy.ndarray'> <class 'numpy.int64'> 4 2022-02-07 04:07:43.220027: W tensorflow/core/kernels/data/cache_dataset_ops.cc:768] The calling iterator did not fully read the dataset being cached. In order to avoid unexpected truncation of the dataset, the partially cached contents of the dataset will be discarded. This can happen if you have an input pipeline similar to `dataset.cache().take(k).repeat()`. You should use `dataset.take(k).cache().repeat()` instead.

Come batch tf.Tensor ( batch_size=-1 )

Usando batch_size=-1 , puoi caricare l'intero set di dati in un unico batch.

Questo può essere combinato con as_supervised=True e tfds.as_numpy per ottenere i dati come (np.array, np.array) :

image, label = tfds.as_numpy(tfds.load(

'mnist',

split='test',

batch_size=-1,

as_supervised=True,

))

print(type(image), image.shape)

<class 'numpy.ndarray'> (10000, 28, 28, 1)

Fai attenzione che il tuo set di dati possa stare in memoria e che tutti gli esempi abbiano la stessa forma.

Confronta i tuoi set di dati

Il benchmarking di un set di dati è una semplice chiamata tfds.benchmark su qualsiasi iterabile (ad esempio tf.data.Dataset , tfds.as_numpy ,...).

ds = tfds.load('mnist', split='train')

ds = ds.batch(32).prefetch(1)

tfds.benchmark(ds, batch_size=32)

tfds.benchmark(ds, batch_size=32) # Second epoch much faster due to auto-caching

************ Summary ************ Examples/sec (First included) 42295.82 ex/sec (total: 60000 ex, 1.42 sec) Examples/sec (First only) 131.50 ex/sec (total: 32 ex, 0.24 sec) Examples/sec (First excluded) 51026.08 ex/sec (total: 59968 ex, 1.18 sec) ************ Summary ************ Examples/sec (First included) 204278.25 ex/sec (total: 60000 ex, 0.29 sec) Examples/sec (First only) 1444.72 ex/sec (total: 32 ex, 0.02 sec) Examples/sec (First excluded) 220821.83 ex/sec (total: 59968 ex, 0.27 sec)

- Non dimenticare di normalizzare i risultati per dimensione batch con

batch_size=kwarg. - Nel riepilogo, il primo batch di riscaldamento è separato dagli altri per acquisire il tempo di configurazione aggiuntivo di

tf.data.Dataset(ad es. inizializzazione dei buffer,...). - Nota come la seconda iterazione sia molto più veloce grazie alla memorizzazione nella cache automatica di TFDS .

-

tfds.benchmarkrestituisce untfds.core.BenchmarkResultche può essere ispezionato per ulteriori analisi.

Costruisci una pipeline end-to-end

Per andare oltre, puoi guardare:

- Il nostro esempio Keras end-to-end per vedere una pipeline di formazione completa (con batching, shuffle,...).

- La nostra guida alle prestazioni per migliorare la velocità delle tue pipeline (suggerimento: usa

tfds.benchmark(ds)per confrontare i tuoi set di dati).

Visualizzazione

tfds.as_dataframe

Gli oggetti tf.data.Dataset possono essere convertiti in pandas.DataFrame con tfds.as_dataframe per essere visualizzati su Colab .

- Aggiungi

tfds.core.DatasetInfocome secondo argomento ditfds.as_dataframeper visualizzare immagini, audio, testi, video,... - Usa

ds.take(x)per visualizzare solo i primixesempi.pandas.DataFramecaricherà l'intero set di dati in memoria e può essere molto costoso da visualizzare.

ds, info = tfds.load('mnist', split='train', with_info=True)

tfds.as_dataframe(ds.take(4), info)

2022-02-07 04:07:47.001241: W tensorflow/core/kernels/data/cache_dataset_ops.cc:768] The calling iterator did not fully read the dataset being cached. In order to avoid unexpected truncation of the dataset, the partially cached contents of the dataset will be discarded. This can happen if you have an input pipeline similar to `dataset.cache().take(k).repeat()`. You should use `dataset.take(k).cache().repeat()` instead.

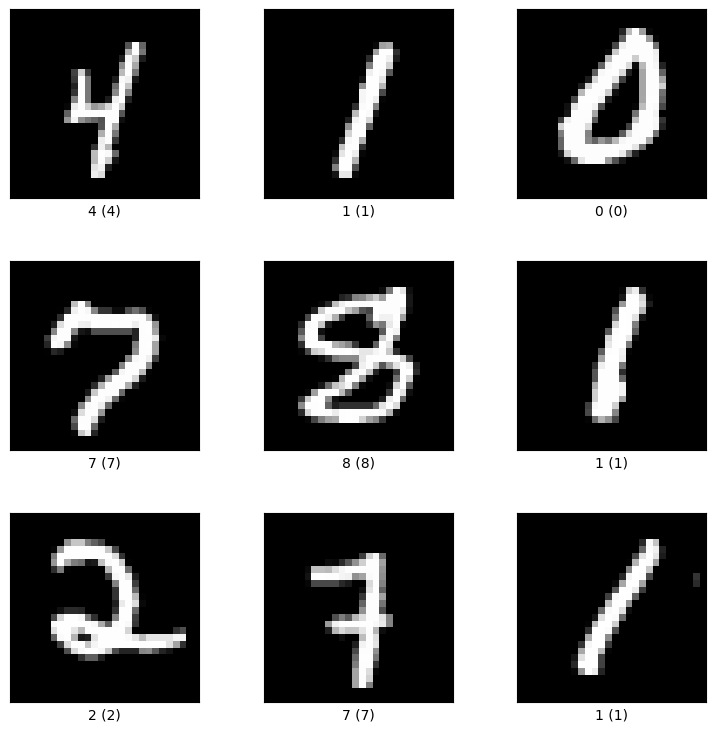

tfds.show_examples

tfds.show_examples restituisce un matplotlib.figure.Figure (ora sono supportati solo i set di dati di immagini):

ds, info = tfds.load('mnist', split='train', with_info=True)

fig = tfds.show_examples(ds, info)

2022-02-07 04:07:48.083706: W tensorflow/core/kernels/data/cache_dataset_ops.cc:768] The calling iterator did not fully read the dataset being cached. In order to avoid unexpected truncation of the dataset, the partially cached contents of the dataset will be discarded. This can happen if you have an input pipeline similar to `dataset.cache().take(k).repeat()`. You should use `dataset.take(k).cache().repeat()` instead.

Accedi ai metadati del set di dati

Tutti i builder includono un oggetto tfds.core.DatasetInfo contenente i metadati del set di dati.

Vi si accede tramite:

- L'API

tfds.load:

ds, info = tfds.load('mnist', with_info=True)

- L'API

tfds.core.DatasetBuilder:

builder = tfds.builder('mnist')

info = builder.info

Le informazioni sul set di dati contengono informazioni aggiuntive sul set di dati (versione, citazione, homepage, descrizione,...).

print(info)

tfds.core.DatasetInfo(

name='mnist',

full_name='mnist/3.0.1',

description="""

The MNIST database of handwritten digits.

""",

homepage='http://yann.lecun.com/exdb/mnist/',

data_path='gs://tensorflow-datasets/datasets/mnist/3.0.1',

download_size=11.06 MiB,

dataset_size=21.00 MiB,

features=FeaturesDict({

'image': Image(shape=(28, 28, 1), dtype=tf.uint8),

'label': ClassLabel(shape=(), dtype=tf.int64, num_classes=10),

}),

supervised_keys=('image', 'label'),

disable_shuffling=False,

splits={

'test': <SplitInfo num_examples=10000, num_shards=1>,

'train': <SplitInfo num_examples=60000, num_shards=1>,

},

citation="""@article{lecun2010mnist,

title={MNIST handwritten digit database},

author={LeCun, Yann and Cortes, Corinna and Burges, CJ},

journal={ATT Labs [Online]. Available: http://yann.lecun.com/exdb/mnist},

volume={2},

year={2010}

}""",

)

Presenta i metadati (nomi delle etichette, forma dell'immagine,...)

Accedi a tfds.features.FeatureDict :

info.features

FeaturesDict({

'image': Image(shape=(28, 28, 1), dtype=tf.uint8),

'label': ClassLabel(shape=(), dtype=tf.int64, num_classes=10),

})

Numero di classi, nomi di etichette:

print(info.features["label"].num_classes)

print(info.features["label"].names)

print(info.features["label"].int2str(7)) # Human readable version (8 -> 'cat')

print(info.features["label"].str2int('7'))

10 ['0', '1', '2', '3', '4', '5', '6', '7', '8', '9'] 7 7

Forme, tipi d:

print(info.features.shape)

print(info.features.dtype)

print(info.features['image'].shape)

print(info.features['image'].dtype)

{'image': (28, 28, 1), 'label': ()}

{'image': tf.uint8, 'label': tf.int64}

(28, 28, 1)

<dtype: 'uint8'>

Dividi metadati (ad es. nomi divisi, numero di esempi,...)

Accedi a tfds.core.SplitDict :

print(info.splits)

{'test': <SplitInfo num_examples=10000, num_shards=1>, 'train': <SplitInfo num_examples=60000, num_shards=1>}

Spaccature disponibili:

print(list(info.splits.keys()))

['test', 'train']

Ottieni informazioni sulla divisione individuale:

print(info.splits['train'].num_examples)

print(info.splits['train'].filenames)

print(info.splits['train'].num_shards)

60000 ['gs://tensorflow-datasets/datasets/mnist/3.0.1/mnist-train.tfrecord-00000-of-00001'] 1

Funziona anche con l'API subsplit:

print(info.splits['train[15%:75%]'].num_examples)

print(info.splits['train[15%:75%]'].file_instructions)

36000 [FileInstruction(filename='gs://tensorflow-datasets/datasets/mnist/3.0.1/mnist-train.tfrecord-00000-of-00001', skip=9000, take=36000, num_examples=36000)]

Risoluzione dei problemi

Download manuale (se il download non riesce)

Se il download non riesce per qualche motivo (ad es. offline,...). Puoi sempre scaricare manualmente i dati da solo e inserirli nella manual_dir (predefinita ~/tensorflow_datasets/download/manual/ .

Per scoprire quali URL scaricare, guarda in:

Per i nuovi set di dati (implementati come cartella):

tensorflow_datasets/<type>/<dataset_name>/checksums.tsv. Ad esempio:tensorflow_datasets/text/bool_q/checksums.tsv.È possibile trovare la posizione dell'origine del set di dati nel nostro catalogo .

Per i vecchi set di dati:

tensorflow_datasets/url_checksums/<dataset_name>.txt

Correzione NonMatchingChecksumError

TFDS garantisce il determinismo convalidando i checksum degli URL scaricati. Se viene generato NonMatchingChecksumError , potrebbe indicare:

- Il sito Web potrebbe essere inattivo (ad es.

503 status code). Si prega di controllare l'URL. - Per gli URL di Google Drive, riprova più tardi poiché Drive a volte rifiuta i download quando troppe persone accedono allo stesso URL. Vedi bug

- I file dei set di dati originali potrebbero essere stati aggiornati. In questo caso, il generatore di set di dati TFDS dovrebbe essere aggiornato. Si prega di aprire un nuovo problema Github o PR:

- Registra i nuovi checksum con

tfds build --register_checksums - Eventualmente aggiornare il codice di generazione del set di dati.

- Aggiorna la

VERSIONdel set di dati - Aggiorna il set di dati

RELEASE_NOTES: cosa ha causato la modifica dei checksum? Alcuni esempi sono cambiati? - Assicurati che il set di dati possa ancora essere creato.

- Inviaci un PR

- Registra i nuovi checksum con

Citazione

Se stai utilizzando tensorflow-datasets per un documento, includi la seguente citazione, oltre a qualsiasi citazione specifica per i dataset utilizzati (che può essere trovata nel catalogo del dataset ).

@misc{TFDS,

title = { {TensorFlow Datasets}, A collection of ready-to-use datasets},

howpublished = {\url{https://www.tensorflow.org/datasets} },

}